Key takeaways

Voice assistants, generative AI, and increasingly, AI agents are becoming integrated into our daily lives and into business decision-making processes.

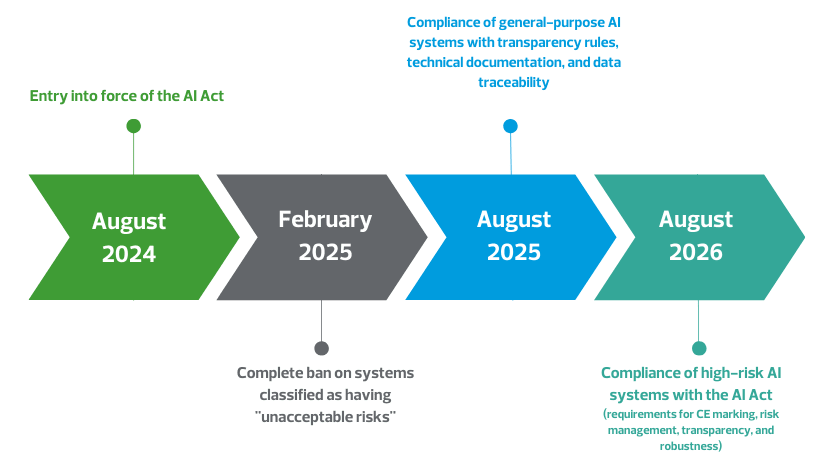

Artificial intelligence (AI) is transforming our societies in unprecedented ways. Given the scale of this transformation, the European Union has adopted the AI Act, the world’s first comprehensive legal framework specifically targeting AI, which entered into force on August 1, 2024. Its provisions will apply gradually over several years to allow businesses to adjust to the new requirements. The main milestones are as follows:

The AI Act aims to establish clear and ethical rules for the responsible development and use of AI within the European Union. By imposing strict standards, the AI Act fundamentally changes the landscape for businesses, especially concerning transparency, security, and the protection of citizens’ rights. This regulation is not merely a framework to follow: it requires a new vision from businesses, which must adapt their models and processes to integrate ethical and transparent AI.

Companies that anticipate these milestones now by incorporating the AI Act standards into their strategy and practices will have a significant market advantage: a head start in terms of reputation and competitiveness by positioning themselves as leaders in responsible and ethical AI.

Our RSM experts offer insights into the essential adaptation strategies businesses need to adopt in response to this new regulation.

AI Act: The Challenge of Business Compliance

What are the implications for AI systems?

The AI Act introduces substantial constraints for businesses, particularly those using high-risk AI systems. The regulation imposes strict standards of transparency, security, and ethics that require businesses to review their existing practices and infrastructures in depth.

Companies that developed their own AI software before the adoption of the AI Act must now adapt it to comply with the new requirements.

Achieving compliance may require modifying existing algorithms, restructuring data, and ensuring that AI-driven decisions respect fundamental rights and user privacy.

For example: an automatic recruitment system must prove that it does not introduce discriminatory biases. Similarly, a decision support tool in healthcare must demonstrate its robustness and reliability to minimize risks to patients.

For many businesses, this means a careful analysis and a comprehensive audit of their current systems, which may demand significant investment and pose a considerable challenge, particularly for tech startups with limited resources.

Key Obligations

To ensure their products comply with European standards, companies providing high-risk AI systems will now need to obtain CE marking. This marking requires the company to provide detailed technical documentation, including a description of the AI system, its features, and the integrated security measures.

In addition to this marking, companies will need to register the high-risk AI systems they develop and/or use in a European database accessible to regulators.

Transparency and traceability are also essential.

For example: companies must be able to document each decision made by the AI and justify it, which requires thorough record-keeping.
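As an illustration of what such record-keeping could look like in practice, here is a minimal Python sketch of a decision log entry. It is not a format prescribed by the AI Act: the `DecisionRecord` fields, the `make_record` and `to_log_line` helpers, and the choice to store a hash of the inputs rather than the inputs themselves are all assumptions made for this example.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One traceable AI decision (hypothetical schema, not mandated by the AI Act)."""
    system_id: str
    model_version: str
    input_digest: str
    output: str
    rationale: str
    timestamp: str


def make_record(system_id, model_version, inputs, output, rationale):
    # Hash a canonical form of the inputs so the log can prove which data
    # was used without storing personal data in clear text.
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return DecisionRecord(
        system_id=system_id,
        model_version=model_version,
        input_digest=hashlib.sha256(canonical).hexdigest(),
        output=output,
        rationale=rationale,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(record):
    # One JSON object per line is easy to append to a file and to audit later.
    return json.dumps(asdict(record), sort_keys=True)


record = make_record(
    "cv-screening", "1.2.0",
    {"years_experience": 6, "degree": "MSc"},
    "shortlisted", "score above internal threshold",
)
print(to_log_line(record))
```

Recording the model version alongside each decision is what allows an auditor to tie a past outcome to the exact system that produced it.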

Furthermore, they must ensure human oversight to monitor the functioning of AI systems, especially those classified as high-risk by the regulation.

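Note: an AI system is considered "high-risk" if, for example, it is a safety component of a regulated product such as a medical device, or if it is used in one of the sensitive areas listed by the regulation, such as education or recruitment.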

This traceability requirement involves implementing continuous monitoring systems to detect and correct any AI errors or deviations, which may necessitate significant technological adjustments.

Human and Financial Investments

Complying with AI Act standards represents a significant financial investment, especially for companies that use AI intensively. Financially, they will need to allocate budgets for obtaining necessary certifications, adapting their information systems, and establishing new governance and monitoring processes.

In terms of human resources, achieving compliance with the AI Act requires ongoing employee training to raise awareness of the implications of the regulation and the risks associated with AI use.

For example: training sessions on algorithmic biases, data security, and privacy management will be essential for technical and managerial teams.

Companies will also need to recruit, train, and develop AI governance experts, such as AI compliance officers or risk managers, who will be responsible for ensuring that systems meet the regulatory requirements.

This investment in skills allows businesses not only to stay compliant but also to anticipate future regulatory and technological developments. By strengthening their expertise in responsible AI, they can avoid non-compliance penalties while gaining competitiveness in a market that increasingly values ethical and transparent practices.

AI Management: Governance and Reorganization at the Heart of the Issues

A Global Dynamic

The AI Act is part of a global movement aimed at establishing responsible governance for digital technologies and artificial intelligence. International initiatives such as the UNESCO Recommendation on AI Ethics, adopted in November 2021 by 193 member states, or the Global Digital Compact, adopted in September 2024, emphasize the importance of an ethical and transparent framework for these technologies. These efforts converge towards a universal recognition of the risks and opportunities related to artificial intelligence.

In addition to the AI Act, the European Union launched the AI Pact, a voluntary cooperation program to help businesses transition to compliance. This pact, structured around two pillars, plays a crucial role in preparing actors for regulatory requirements:

- Information Sharing and Exchange: The AI Pact network enables companies, organizations, and regulators to share best practices and better understand the implications of the regulation;

- Encouraging Voluntary Commitment: The pact facilitates the implementation of AI Act measures even before they become mandatory, offering companies tools and resources to plan their compliance.

Through the AI Pact, companies can anticipate legal requirements while benefiting from a collaborative environment to innovate responsibly. This initiative is also seen as a strong signal sent by the EU to its international partners, affirming its leadership in AI regulation. By encouraging voluntary commitments, the AI Pact complements the mandatory framework of the AI Act and reflects a desire to combine flexibility with rigor in AI governance.

Thus, by strengthening ties between various actors—public or private, local or international—the European Union is positioning AI as an engine for ethical and competitive growth, while inspiring a global dynamic for sustainable innovation.

AI: What Governance and Governability?

The AI Act therefore imposes AI governance based on monitoring, continuous evaluation, and transparency. Companies must ensure that their AI systems are "governable," meaning they:

- Are under human control;

- Can be adjusted or stopped in case of inappropriate behavior.
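The two properties above can be sketched in a few lines of Python. This is only one possible design under stated assumptions: the `GovernedModel` wrapper, its `review_threshold` parameter, and the `SystemHalted` exception are hypothetical names introduced for this example, and the wrapped `predict` function is assumed to return a label and a confidence score.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


class SystemHalted(RuntimeError):
    """Raised when an operator has stopped the system."""


@dataclass
class GovernedModel:
    # predict is assumed to return (label, confidence between 0 and 1).
    predict: Callable[[dict], Tuple[str, float]]
    review_threshold: float = 0.8  # hypothetical value, to be set per use case
    halted: bool = False

    def stop(self):
        # "Can be adjusted or stopped": an operator can halt all decisions.
        self.halted = True

    def decide(self, inputs):
        if self.halted:
            raise SystemHalted("system stopped by operator")
        label, confidence = self.predict(inputs)
        if confidence < self.review_threshold:
            # "Under human control": uncertain cases are never decided automatically.
            return {"decision": None, "status": "needs_human_review", "suggested": label}
        return {"decision": label, "status": "automated", "suggested": label}


model = GovernedModel(predict=lambda x: ("approve", x["score"]))
print(model.decide({"score": 0.95})["status"])
print(model.decide({"score": 0.50})["status"])
```

Routing low-confidence cases to a person is a common pattern, but the right threshold and escalation path depend on the system's risk level and are a governance decision rather than a technical one.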

This requires integrating supervision and regulation tools that ensure AI remains aligned with the company's goals and values. Some platforms provide guidelines on governance to ensure successful AI system integration.

Effective governance relies on comprehensive documentation.

For example: for a product recommendation system, the company must be able to justify why a specific user received a recommendation and demonstrate that the choice is free of discriminatory biases. This requires establishing algorithm verification protocols, detailed technical documentation, and regular audits to maintain strict control over the functioning of the AI.
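One simple verification such a protocol might include is a comparison of outcome rates across user groups. The Python sketch below computes a demographic parity gap; the AI Act does not prescribe this or any other specific fairness metric, and the function names, the sample data, and the idea of an alert threshold are assumptions made for illustration.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive outcomes per group, from (group, selected) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the most and least favoured groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: did users in each group receive the recommendation?
sample = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = selection_rates(sample)
print(rates)
print(parity_gap(rates))
```

A large gap does not prove discrimination on its own, but it flags where a documented justification or a deeper audit is needed.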

These transparency and governability requirements impose a level of rigor that was not always present in many companies' practices, particularly those that had not yet made a habit of documenting and controlling their AI so precisely.

What Impact on Decision-Making Processes and What Role for Executive Committees?

The AI Act goes beyond technical adjustments: it requires a profound transformation in decision-making processes within companies. With this regulation, businesses must integrate ethics, transparency, and accountability into their core values, especially to reassure clients and partners about the integrity of their AI technologies.

Implementing the AI Act largely depends on the commitment of executive committees, which play a crucial role in developing and monitoring a coherent and compliant AI strategy. This could involve appointing AI compliance officers—specialists who oversee AI ethical rules and security standards within the organization. These officers could be Data Protection Officers (DPOs) or Chief AI Officers, depending on the size of the company and the complexity of its AI activities.

The executive committee is also tasked with creating a work environment that fosters collaboration between various teams, notably technical teams (developers, data scientists), business units, and legal, audit, internal control, and compliance teams. These groups must work together to anticipate risks and ensure continuous monitoring of AI systems. This governance model thus enhances internal transparency and enables better adaptation to regulatory requirements.

The Integration of "Digital Factories"

The integration of "digital factories", internal entities dedicated to developing digital and AI solutions, is also one of the options to facilitate compliance. These internal entities help ensure stricter control of AI applications by making sure that each stage of design, testing, and deployment adheres to the transparency and ethical standards set by the AI Act.

"Digital factories" centralize the skills and resources needed to develop and supervise AI within the company, creating a governance framework that guarantees consistency in compliance practices. These units can also serve as innovation labs, allowing AI solutions to be tested securely while ensuring the required documentation and traceability. By deploying such structures, companies demonstrate their commitment to ethical and responsible AI while preparing for future regulatory developments.

AI Act: Anticipation and Risk Mapping

Risk Mapping and Updates

One of the fundamental requirements of the AI Act for businesses is to create a risk map for their AI systems. This mapping is a crucial exercise for identifying and analyzing the specific risks that each AI model may pose, whether in terms of security, ethics, or the respect of fundamental rights.

Regular updates to this mapping are also essential to ensure ongoing compliance. With the constant evolution of technologies and regulations, the risks associated with AI can change rapidly. Therefore, businesses must ensure that this mapping is dynamic and adapts to new challenges—whether regulatory or technological—as they emerge.

This approach ensures essential responsiveness to stay compliant while minimizing negative impacts from AI systems on users and stakeholders.
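A risk map of this kind can be kept as a simple structured register. The Python sketch below is one hedged way to do it: the `RiskEntry` fields, the 1-to-5 likelihood and impact scales, the likelihood-times-impact score, and the 180-day review interval are conventions borrowed from general risk management and chosen for this example, not requirements of the AI Act.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class RiskEntry:
    system: str
    description: str
    likelihood: int  # 1 (rare) to 5 (frequent), hypothetical scale
    impact: int      # 1 (minor) to 5 (severe), hypothetical scale
    last_reviewed: date

    @property
    def score(self):
        # Classic likelihood x impact score used to rank risks.
        return self.likelihood * self.impact


def prioritized(entries):
    """Highest-scoring risks first."""
    return sorted(entries, key=lambda e: e.score, reverse=True)


def overdue(entries, today, max_age_days=180):
    """Entries whose last review is older than the chosen interval."""
    cutoff = today - timedelta(days=max_age_days)
    return [e for e in entries if e.last_reviewed < cutoff]


register = [
    RiskEntry("cv-screening", "discriminatory bias", 3, 5, date(2024, 1, 15)),
    RiskEntry("chatbot", "misleading answers", 4, 2, date(2024, 9, 1)),
]
for entry in prioritized(register):
    print(entry.system, entry.score)
print([e.system for e in overdue(register, today=date(2024, 10, 1))])
```

Tracking the last review date next to each risk makes the "regular updates" requirement checkable: any entry returned by `overdue` is a prompt to revisit the assessment.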

Long-Term Strategic Vision

The AI Act encourages businesses to adopt a long-term strategic and technological vision to anticipate risks and make the most of AI while staying compliant. To ensure their survival in a constantly evolving technological environment, businesses must develop an AI strategy that considers both future opportunities and risks. This includes regular regulatory and technological monitoring to stay informed about AI developments and potential legislative changes.

This long-term vision also implies investments in technologies that facilitate rapid adaptation to the new AI Act requirements.

For example: companies could adopt predictive analysis tools to identify risks before they affect users or clients.

A proactive and anticipatory approach allows businesses to prepare effectively for future developments, thus strengthening their resilience and competitiveness in the market.

Skills Management and Continuous Training

Businesses are required to develop specific skills, and to train their staff accordingly, in order to anticipate and manage the risks associated with artificial intelligence. This training is essential for raising awareness among employees about the various aspects of AI, such as detecting biases, managing data security, and protecting individual rights.

To meet the requirements of the AI Act, businesses must train their teams on best practices in AI governance, so that everyone involved with AI has a clear understanding of the obligations and risks involved.

Continuous training programs are particularly recommended for employees working directly with AI systems, such as developers, data scientists, and compliance officers. These programs ensure that employees maintain adequate skills in the face of constantly evolving technologies and regulations. In addition to internal training, some companies choose to organize AI awareness workshops for cross-functional teams (e.g., marketing or customer service departments) so they understand how AI influences their activities and how to react in case of ethical issues or non-compliance.

The AI Act encourages companies to adopt AI aligned with European values, focused on transparency and respect for user rights. Companies have a role to play in promoting ethical and responsible AI to help build a trusted digital space. This regulation thus represents a powerful lever to ultimately strengthen businesses' competitiveness and resilience in an increasingly technology-driven environment. By committing to compliance and adapting to the new requirements today, European companies solidify their place in a demanding international market while affirming their commitment to sustainable and ethical innovation.