The remarkable capabilities of AI bring substantial responsibilities for businesses, especially in managing the risks to Environmental, Social, and Governance (ESG) principles. Under the EU's Corporate Sustainability Reporting Directive (CSRD), companies are required to disclose the social and environmental risks of their operations, along with their impacts on people and the planet. Therefore, as AI becomes increasingly integral to business operations, organizations utilizing any form of AI must identify and evaluate the corresponding potential risks. In this article, we briefly explore the spectrum of risks introduced by AI that enterprises must navigate and report on within the framework of the CSRD.

This article was written by Cem Adiyaman and Sefa Gecikli, who are part of the RSM Strategy team and have strong expertise in digital law.

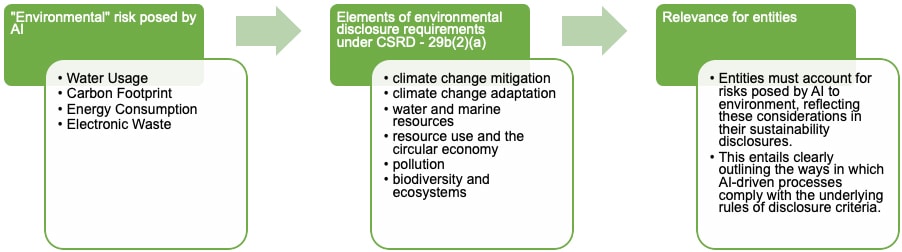

Assessing the Environmental Footprint of AI

AI's capability to streamline operations and optimize energy usage is tempered by its own considerable environmental impact. AI servers consume a significant amount of energy, generating substantial heat in the process. To prevent overheating, data centers often rely on cooling towers and large quantities of water to transfer the heat away from the servers. These servers, tasked with training and operating complex AI models, are incredibly power-intensive. Each server can use as much electricity as an average household, leading to substantial overall energy consumption.

In 2019, a study highlighted the energy required to train widely used natural language processing (NLP) models. It estimated that the carbon footprint of training a single advanced AI model was equivalent to the carbon dioxide emissions from about 125 round-trip flights between New York and Beijing. Furthermore, AI innovation is accelerating the turnover of electronic equipment. Cutting-edge server chips quickly render earlier models obsolete, which adds to electronic waste.

The substantial energy requirements and rapid obsolescence associated with AI technologies present significant environmental risks, necessitating greater transparency through environmental disclosures mandated by the CSRD for businesses using any form of AI.

Assessing the Social Footprint of AI

The advent of AI has brought with it a host of benefits, but also a range of "Social and Human Rights" risks that cannot be ignored. One of the most harmful of these is the spread of bias and discrimination. AI systems, particularly those involving machine learning, inherit the biases present in their training data. If the data reflects historical inequalities or societal biases, or is not sufficiently inclusive, the AI system can perpetuate and potentially amplify these biases. For instance, AI used in recruitment may favour candidates from a particular demographic group if the training data does not encompass a diverse range of successful profiles.
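As a rough illustration of how such bias might be surfaced in practice, the sketch below compares selection rates across groups for a recruitment tool. The function names, group labels, and sample outcomes are all hypothetical and invented for this example. The ratio is only a simple screening signal under these assumptions, not a legal test or a reporting requirement.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of selected candidates per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the screening tool shortlisted the candidate.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favoured
    return min(rates.values()) / highest


# Hypothetical screening outcomes, invented purely for illustration.
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(sample)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 suggests that one group is shortlisted far less often than another and that the training data and model deserve closer review.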

Privacy erosion is another critical issue for businesses, as AI systems rely on processing vast amounts of personal data to produce outputs. The social impact of this erosion can affect both internal stakeholders, like employees, and external parties, including customers. Security is a further concern: risks may arise from the deployment of AI in military applications or criminal activities, or from the malfunctioning of AI-driven systems.

The elements of the fundamental rights impact assessment (FRIA) for high-risk AI systems under the upcoming EU AI Act can guide businesses through their reporting obligations under the CSRD. This includes assessing which categories of persons or groups are affected by the AI systems, identifying specific potential risks of harm, and detailing the measures taken to mitigate these risks. Additionally, it encompasses describing the human supervision integrated into the AI system and outlining the remediation strategies for any risks that materialize. Collectively, these components may provide ample material for thorough and compliant reporting under the CSRD as well.
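One way a reporting team might keep track of these elements is a simple structured record per AI system. The sketch below is a minimal illustration only: the class name, field names, and example values are assumptions made for this example and are not prescribed by the EU AI Act or the CSRD.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ImpactAssessmentRecord:
    """Hypothetical record mirroring the assessment elements listed above."""

    system_name: str
    affected_groups: List[str] = field(default_factory=list)
    risks_of_harm: List[str] = field(default_factory=list)
    mitigation_measures: List[str] = field(default_factory=list)
    human_oversight: str = ""
    remediation_plan: str = ""

    def missing_elements(self) -> List[str]:
        """Return the names of elements that have not been filled in yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Illustrative entry for an invented recruitment tool.
record = ImpactAssessmentRecord(
    system_name="CV screening tool",
    affected_groups=["job applicants"],
    risks_of_harm=["indirect discrimination"],
)
print(record.missing_elements())
```

Listing the empty elements makes it easy to see which parts of an assessment still need input before the material is reused for disclosure.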

Assessing the Governance Footprint of AI

AI systems can generate incorrect outputs ('hallucinations' and false positives) and offer little insight into their decision-making process (their 'black box' nature), which challenges the transparency and accountability expected by various stakeholders. Incorrect outputs from AI systems can lead to misguided decision-making and strategy formulation, potentially resulting in financial loss, reputational damage, or legal liabilities. For example, if an AI system incorrectly identifies trends and the company acts on these false positives, it could invest in the wrong initiatives, impacting shareholder value. The opaque nature of AI also poses a significant risk to the transparency required for sound governance. Stakeholders, including shareholders, regulators, and the public, demand clarity on how decisions are made, especially if they have widespread implications.

Forward Thinking

AI has emerged as a top concern, ranking among the threats outlined in the Netherlands' national security strategy and spotlighted by the EU Commission as one of the tech sectors with the highest risk potential. This recognition underscores the pressing need to meet ESG standards. The forthcoming EU AI Act will dictate the terms for AI use, but companies must also pinpoint and communicate the AI-related risks within their operations under the CSRD framework.

In the landscape of artificial intelligence, forward-thinking businesses are called not only to harness the transformative power of AI but also to navigate its multifaceted impacts with foresight and responsibility. Embracing sustainable AI practices necessitates a commitment to minimizing the environmental footprint through energy-efficient technologies and renewable energy sources, ensuring AI systems are designed with inclusivity and fairness at their core to combat bias and discrimination, and enhancing transparency and accountability in AI governance to maintain stakeholder trust. By integrating these principles, companies can lead the way in demonstrating that technological advancement and ethical stewardship can go hand in hand, setting a benchmark for innovation that respects our planet, upholds human rights, and fosters trust in the digital age.

With a team of experts in technology, digital law, and ESG frameworks, RSM NL is your navigator through the intersection of AI and ESG. We provide clear, actionable insights to ensure your AI integration aligns with the latest regulations and ethical standards, turning potential risks into informed opportunities. Let us guide your journey toward sustainable innovation, helping your business to thrive responsibly in an AI-driven future.